Intro

It would be helpful to read DALL·E 2 and Stable Diffusion Model Architectures: A high level overview before moving on with this one.

DALL·E 2 is now open

You can get access and try it our for yourself at openai.com/dall-e-2.

When I was making these images, though, it had a waitlist. Immediately after getting access I ran to try it out, and the results were simply PHENOMENAL!! I wasn't expecting the kind of images output by the system. And mind you, my hopes were quite high to begin with.

BTW DALL·E 2 generates 4 images in one go, so when you enter a prompt, it will output 4 images.

Set 1: Generating OG cover images

Instead of a metallic humanoid robot holding a Rubik's cube, I thought a perfectly meta cover image for this post would be one that's generated by DALL·E 2 itself. The first prompt I gave was:

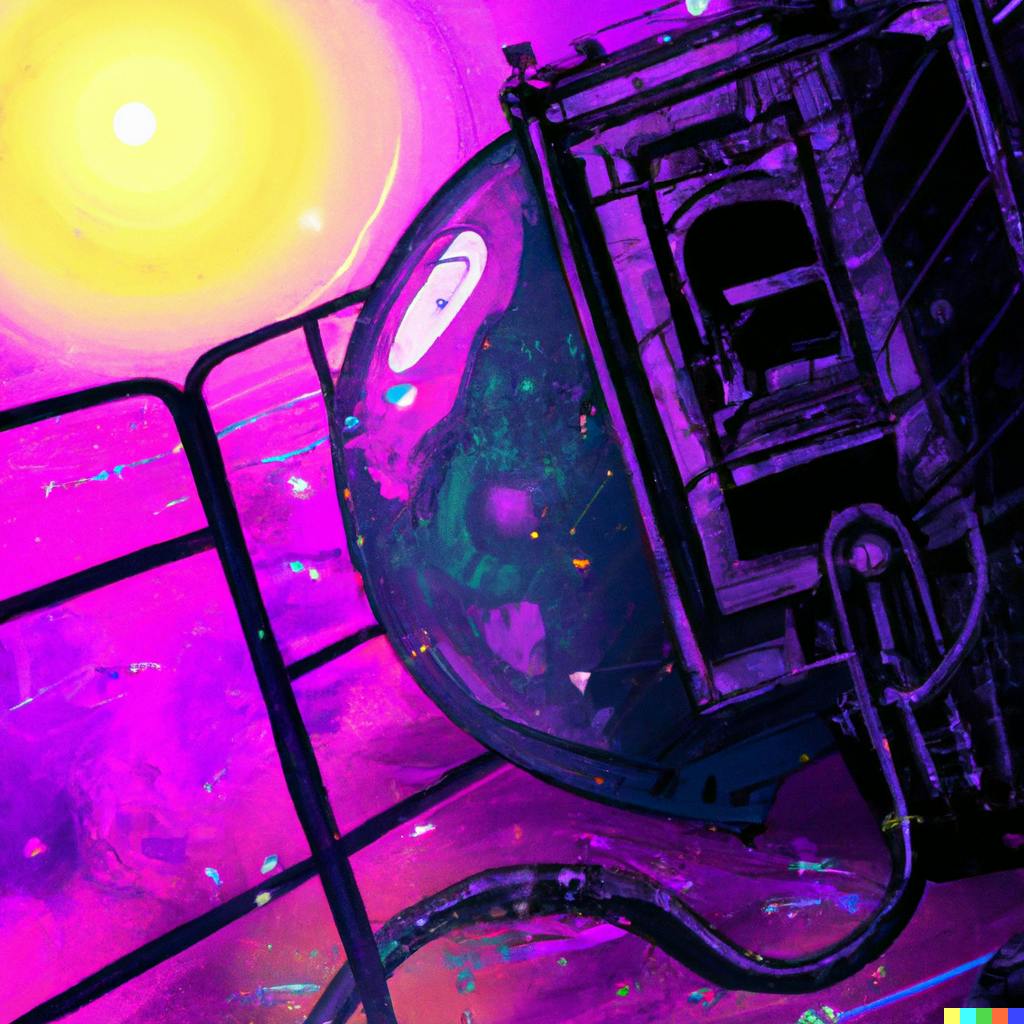

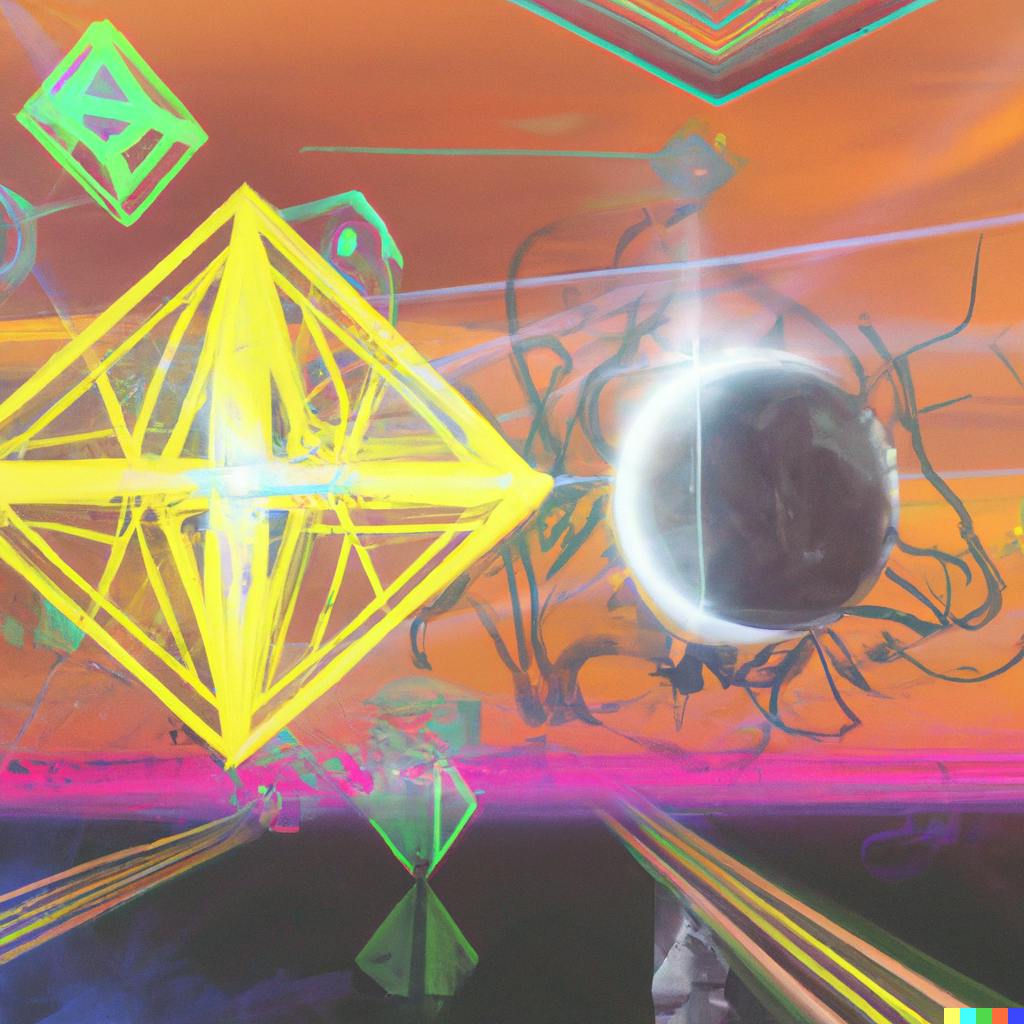

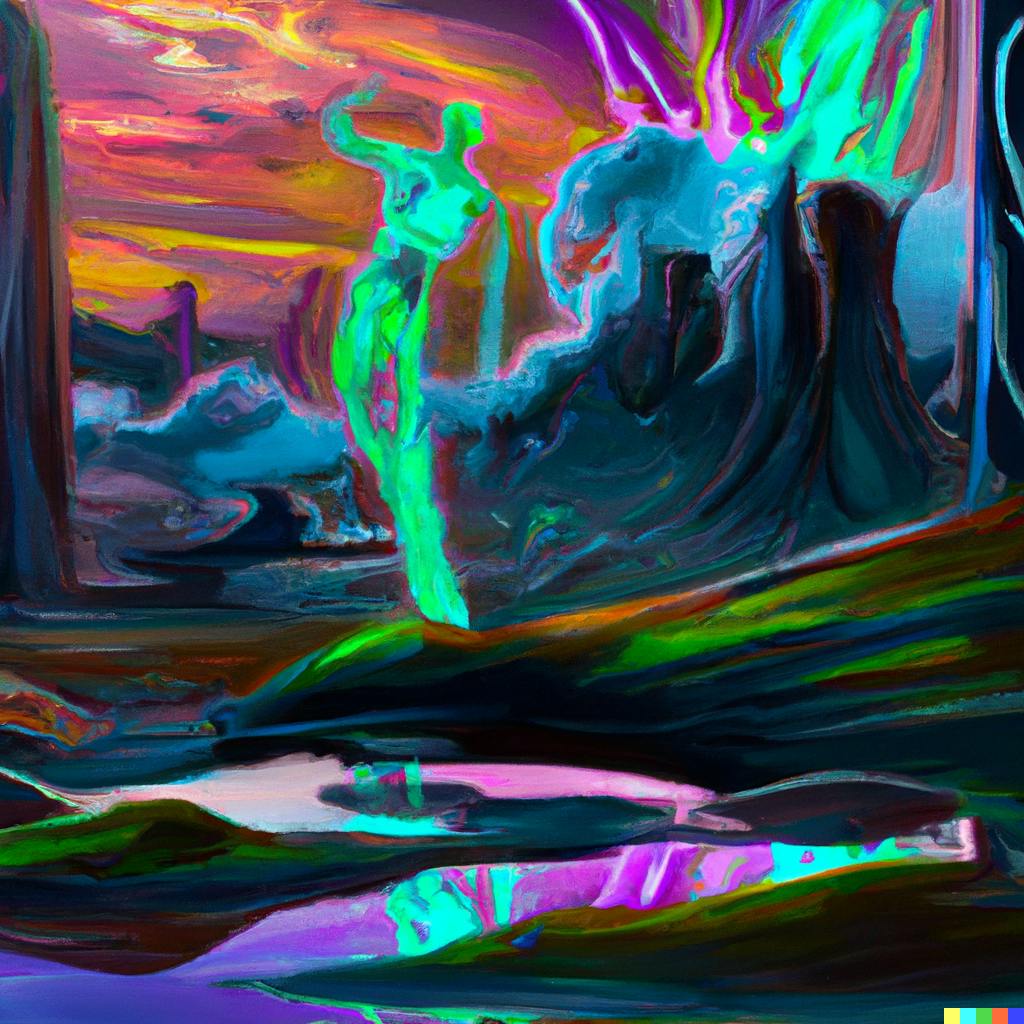

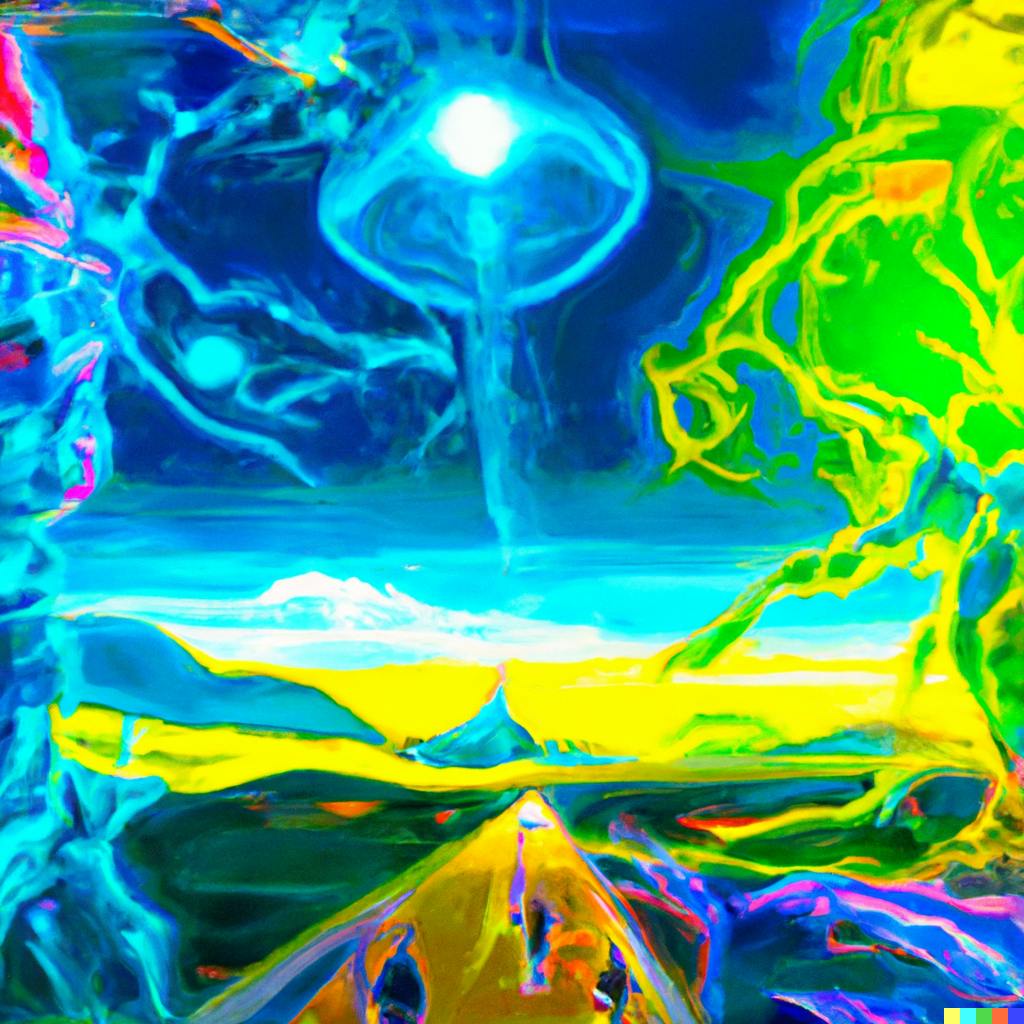

Cover image for a dall-e 2 blog post which is a colorful digital art showing a psychedelic dystopian future

And these were what came out:

This one doesn't really show psychedelic colors, nor a dystopian future, but the details, texture and clarity are amazing. DALL·E 2 seems to really capture a style, if it's mentioned. For example the way it portrays digital art through the textures and colors.

This one shows clear visual representation of each word in the prompt. You can see elements that can be construed as dystopian.

| Third One | Fourth One |

|  |

This was extremely impressive, especially for someone whose last exposure to generative imaging was CycleGAN.

While the one I used as the cover image has the best composition in my opinion, I was the most impressed by the fourth image. In Set #2, I'll tell you why.

Set 2: Variations of Set #1

DALL·E has this option where you can generate variations of an image, either uploaded by you, or an image that it created itself.

From the last set, I sequentially generated variations of each. Here are the variations of the third and fourth one:

|  |

|  |

To me, this looks like a person witnessing either the rise of an empire, or the sun setting on one, signifying an end. Hence, dystopian.

What is more impressive is the way the variations differ. While the top two don't just have different palace architectures and layouts, but the colors on them are inverted. What was teal in the first one turns reddish-pinkish-violet in the second one, and vice versa.

The bottom two images have a relative difference in perspective. The left one shows the person (or so we assume) looking at the palace from a distance, sort of an ultra-wide depiction. The palace in the right one looks nearer, as if the subject is standing inside.

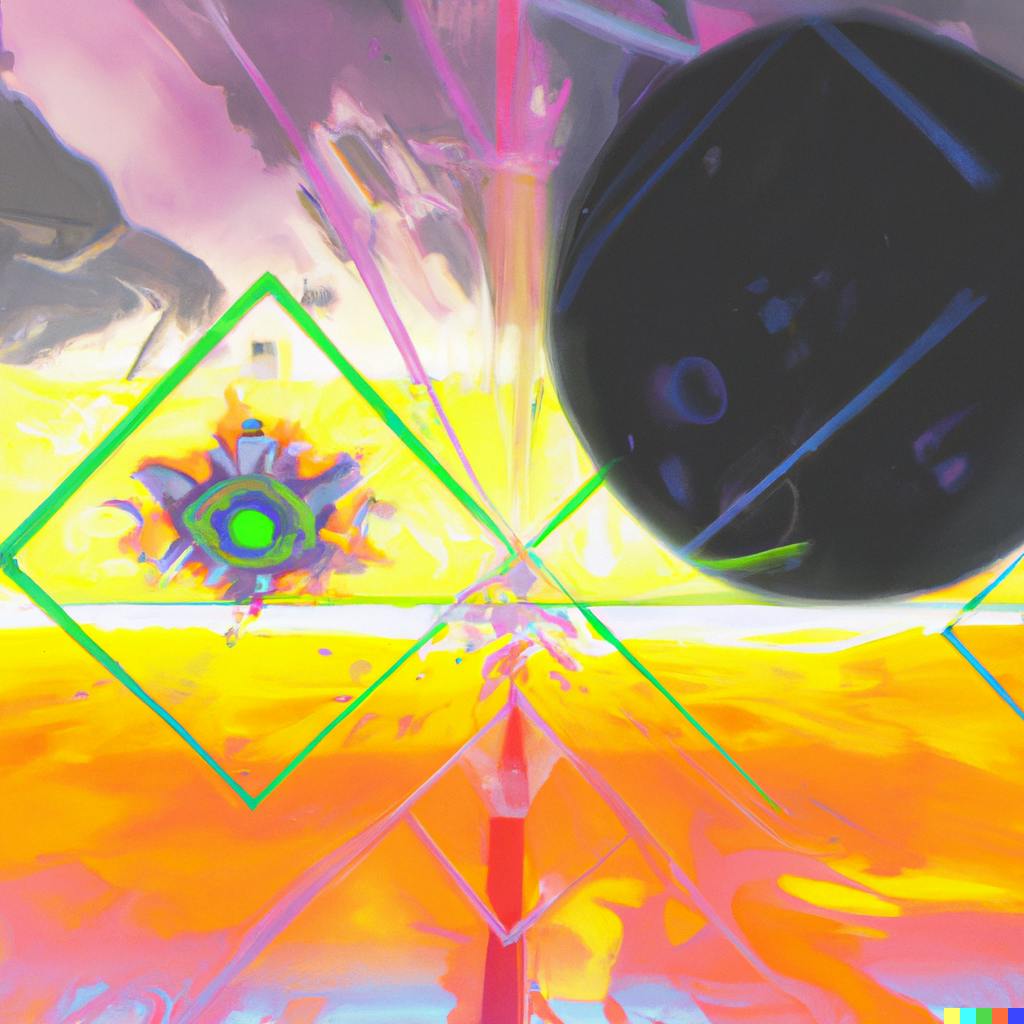

Generating variations on the fourth image, we see it venture into abstract worlds.

|  |

|  |

This set of images are not that visually pleasing, because some parts are overexposed and the blacks look greyish, etc. But the real gem is in the details.

The top left image, to me, looks like an eye looking down through the dense, dark clouds. Look at the eye, and just let that sink in for a minute. An AI, representing an eye, with just a square and a sphere.

To me, that's abstract art. It seems to know that an eye, in its fundamental form, consists of an eyeball in its enclosure, and that just opens up an uncanny avenue to explore. What other understanding, or worldly-syntax is hidden in the model's knowledge. Can such a large language model, or zero shot reasoner decipher patterns and links between real world concepts that even we, as humans, haven't figured out yet? How long will Loab keep haunting us? What more hides within the folds of its billions of parameters.

Philosophy aside, it's truly a wonder how such a black box model is able to depict such details. Representing an eye with a square and circle is not a feat in itself. But the fact that the model even has the capability to do that opens up an entirely new line of questions and experiments to find out whether making existing models large enough can lead us to artificial general intelligence?

Enough philosophy, moving on...

Set 3: DALL·E 2 on real life images

DALL·E 2 has this ability where you can upload photos and ask it to modify them. You can either generate variations (no text prompt), or you can give it a text prompt and a generation frame to make changes.

The variation generation without text prompts didn't produce anything significant when I tried.

The photos are basically the same, with minor brightness and contrast variations (maybe, or it may just be a problem with my display).

It's a bit more impressive when given a prompt, like:

Turn the water into clouds and the bridge into a fire breathing dragon which has shiny black scales

And it produces this, with the same photo as earlier.

The bridge replication is amazing. However, compared to the earlier images created solely based on a text prompt, I find this result underwhelming. It just adds what looks might like a dragon to the right, and the water is barely cloudy. Also the dragon is not breathing fire. Yes, I'm really upset about the dragon.

I'm sure the model has potential to do more. I know this because I tried running just the prompt without the image.

Sunset landscape where the water is turned into clouds and a bridge is in the shape of a fire breathing dragon which has shiny black scales

And it produces THIS!

And these...!

|  |  |

Look at those reflections! DALL·E 2 has an awesome capability to play with lights and shadows.

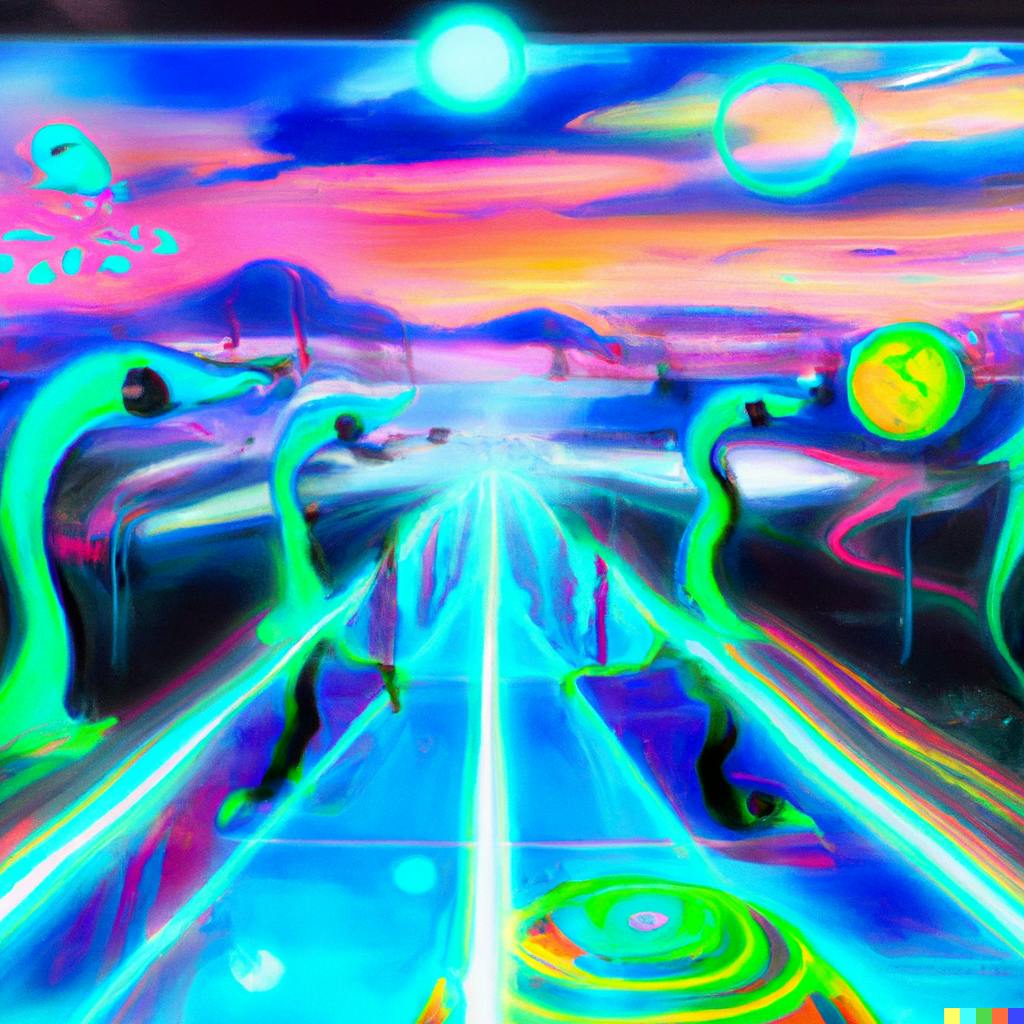

Set 4: Neon lights

I wanted to try how the model renders neon lighting effects.

A mystical landscape with neon colors bursting from the sky and strange beings descending upon the land in the style of a futuristic digital painting on a glass wall

The colors! You know when you read poetry some fleeting visuals often come to your mind, but you can't really get a grasp on what they actually are. To me, some of DALL·E 2's visuals remind me of that exact mental state.

One thing to note here is that the prompt never mentions humans. It says strange beings. The model depicts that by a humanoid figure. This shows how the model's perception is closely tied to the data it's trained on. Maybe the word beings is mostly used in the context of human beings, or maybe pop cultures' idea of a strange beings is mostly a disfigured humanoid.

This left one below shows artifacts of a real landscape, with hills, rivers and trees. The other ones are mostly fantasy.

|  |

Set 5: Questions about art

With all this impressive performance, I obviously started wondering if this can replace artists one day. And the most newsworthy thing about artists is how they sell them for millions.

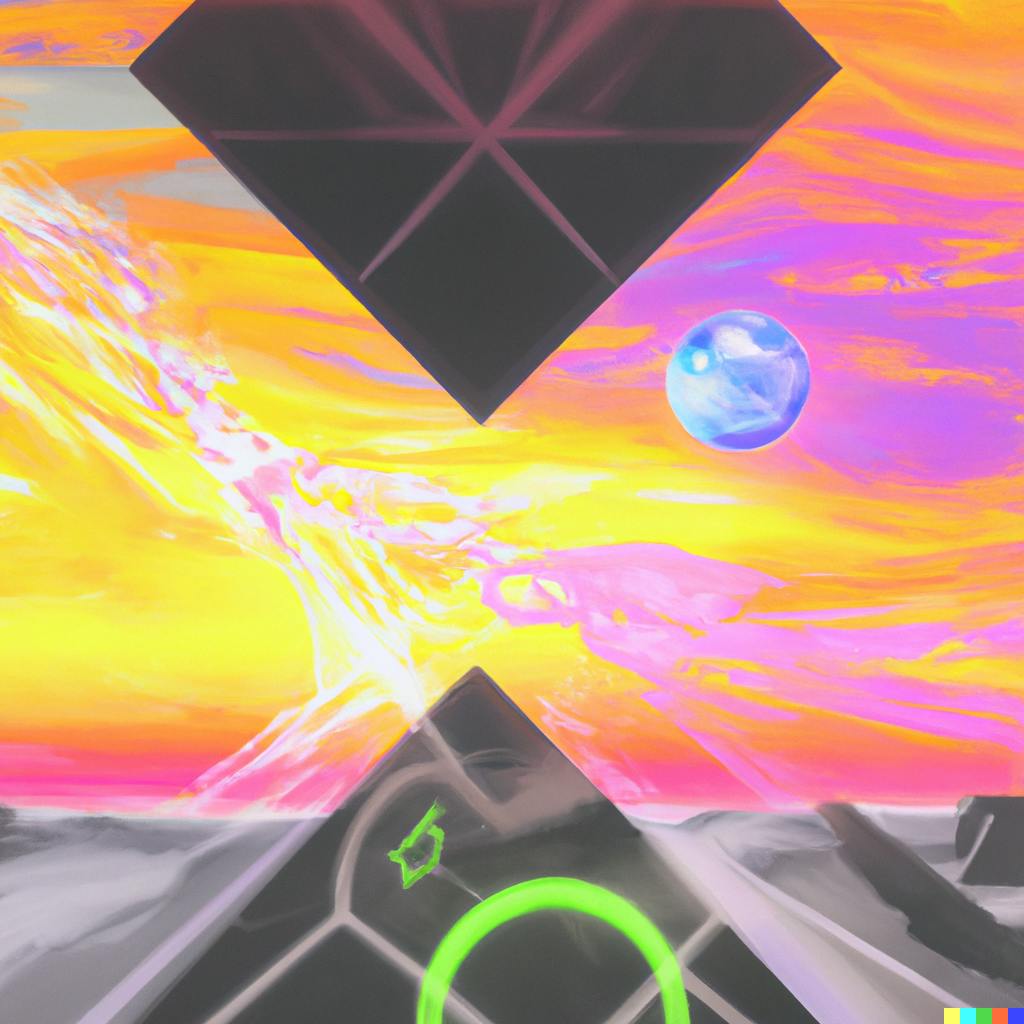

Abstract art that can be sold for millions of dollars, hung in a museum

|  |

Both of these look extremely stringy. Although I have no idea why it thinks they can be sold for millions of dollars. But we already know that the model has a sufficiently good idea about the real world. Does this mean I should proceed to sell these images?

|  |

These two look childish. Almost like some kid was playing with paint. However the question becomes why the model thinks this is what expensive art looks like.

What does all of this mean

The more I experiment with DALL·E 2, I uncover new capabilities. Discovering what more hides in it's latent space is a matter of creative exploration.

At this point, it would be good to take a step back. What even is DALL·E? It's just an image model. It's just an image model, nothing else! All it has seen throughout training is just pairs of image and its caption. With just that it can not just form relations between words and images, but can play around with it. It can change relative positions, styles, colors, everything...in response to a change in prompt.

Slowly this kind of machine understanding would not only be constrained to text and images, but would undoubtedly expand to other forms of communication.

This was just warming up. I showed this to some of my friends and they gave me several queries to try. I will be writing about them in a future follow-up post, as this one's getting long.

Some distressingly dark, some insanely funny and some utterly disappointing. Most of them are fascinating, many are mundane. While some of them are exactly what we expect, the others blow us away.

- But all have one thing in common, an expression of awe upon being seen. Some of them aren't even extraordinary. But the way it shows a language model can combine various real world contexts this way shows how far we have come. The search is on. We haven't even scratched the surface. But that doesn't mean the dent we made is not significant.